Advances in machine learning are increasing access to tools for creating realistic synthetic images of people. Researchers, journalists, and platform companies need to consider ways to mitigate harms from misuse of these tools. Deepfakes are convincingly altered videos of people generated by machine learning that have been used to impersonate and/or misrepresent. In order to facilitate conversation about their use, it is useful to disentangle some of the concepts that they encompass.

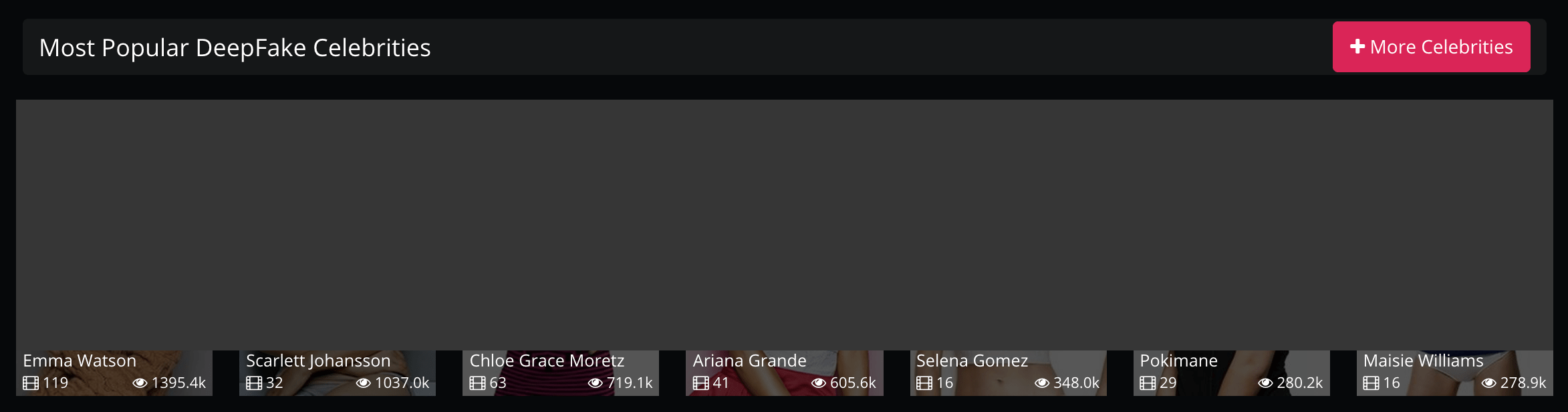

Top searches for non-consensual deepfake porn on a dedicated video-sharing website

What do we mean by “deepfake”?

Vice first reported on deepfakes in late 2017, publicizing their use for non-consensual porn. Since then, concerns have been raised in the popular media about many other forms and uses of digital media manipulation. There are many different methods for editing media, not all of them limited to video or involving complex machine learning. There are also different ways that these edited media can do harm.

Usually, “deepfake” refers to video generated using machine learning that manipulates a real person's face. This includes face swaps (Dale et al. 2011), facial re-enactment (Thies et al. 2016), and other synthesis techniques (Liu et al. 2017).

Face swaps retain the face movements of the real video, but impose a synthetic face. This requires a target video and many source images of the face to be swapped. Non-consensual celebrity porn falls under this category.

Facial re-enactment keeps the face of the real video but imposes synthetic face movements. This technique can generate face movements driven by a number of input sources, such as a real face video (Kim et al. 2018) or an audio stream to lip sync (Suwajanakorn et al. 2017). Misinformation tends to fall under this category.

Although they are highly relevant, techniques such as audio synthesis (Jin et al. 2017) and pose synthesis (Chan et al. 2018) are usually not called deepfakes. Synthetic images of non-existent people are usually also not called deepfakes in the press.

This definition is independent of the intent of the video. Not all deepfake videos are intended to cause harm. Creators on the internet have used the technology to recreate scenes in movies and launch memes of Nic Cage.

Nic Cage faceswapped onto characters from Lord of the Rings by YouTuber derpfakes

How deepfakes do harm

Deepfakes can be thought of as potentially doing harm in two ways: as misinformation, or as misrepresentation.

In the case of misinformation, the video manipulation has a deceptive effect, and societal harm comes about from its perceived truth value. For instance, in 2018 a Belgian political party circulated a fake video of Donald Trump speaking about the Paris climate agreement. Although the creators of the video claim not to have intended to produce misinformation, the viral video deceived most internet commenters. Social psychology research has suggested that political images play a role in the formation of false memories around political events (Frenda et al. 2013). Deepfakes of this nature tend to be most harmful when their subjects are public figures and the videos are widely circulated. These videos are more likely to use sophisticated, higher-fidelity video manipulation techniques because verisimilitude contributes to their success. Potential responses to this form of harm include public fact-checking performed by journalists and careful documentation of misinformation by archivists.

In the case of misrepresentation, it does not matter whether the video manipulation is taken to be true or false. Harm comes to individuals from the existence of a video made without its subject's consent, which may bring about emotional or reputational harm. For instance, deepfakes have been used not only to create non-consensual porn of celebrities, but also to conduct targeted harassment campaigns against journalists like Rana Ayyub. End-user tools like FakeApp have become available, allowing non-experts to create deepfake videos. This increases the possibility of non-consensual deepfake videos being created of people who are not public figures. Platforms like Reddit, Pornhub, Discord, and Gfycat have responded to this form of harm by developing policies that ban deepfakes. However, these policies are unevenly enforced, leaving victims without much recourse. As of February 2018, Pornhub was not using AI-assisted tools to detect deepfakes, suggesting that it likely relies on responding to user flagging rather than actively preventing uploads of deepfake content. This shifts some responsibility toward individual users to do platforms' moderation work, which could be problematic on platforms like Pornhub where users are not necessarily incentivized to take down deepfake videos.

How proportionally concerned should the public be about each of these possible harms? Many have pointed out that the popular narrative around deepfakes has centered on a hypothetical threat of deepfake misinformation, rather than the very real issue of non-consensual pornography.

Most of the few instances of politically motivated deepfake misinformation were widely and immediately called out as fake by journalists. Furthermore, there are many conventional means of creating video-based disinformation that would be cheaper and possibly more effective than deepfakes, such as presenting clips out of context. For instance, in November 2018, the White House Press Secretary shared a video involving CNN correspondent Jim Acosta that was suspected of having been altered by speeding up a segment of the footage. While the video was not false per se, many news outlets called it misleading.

While the deepfake misinformation threat has largely been hypothetical, there are active communities on the first page of Google search results hosting thousands of non-consensual celebrity deepfakes. Victims range from mainstream Hollywood celebrities to K-pop stars and YouTube/Twitch streamers. There are also active message boards associated with these websites where users request pornographic deepfakes of specific individuals. This use of deepfakes makes possible new harms that are larger in magnitude than the equivalent non-AI harms (like photoshopping a single image or making an unconvincing faceswap video).

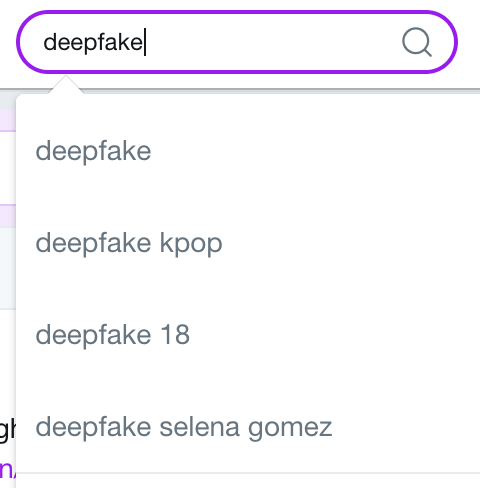

Twitter autocomplete when searching "deepfake"

Questions and approaches across disciplines

Researchers across various fields have responded to issues raised by the proliferation of deepfakes. Legal scholars have discussed how the law might or might not provide deterrents or recourse in case of harms to individuals or threats to democracy (Chesney & Citron 2018). In the US, image privacy law is essentially a reappropriation of copyright law, so ownership of publicly available images becomes a relevant question. Right of publicity law, which is implemented at the state level and varies by state, is also relevant. Ideas from law about revenge porn may also apply.

Scholars have also discussed the difficulties that might arise as the existence of deepfake technology erodes trust in video, changing the role of authentication for videos treated as evidence in court (Maras & Alexandrou 2018).

In cognitive science, research suggests that “people have poor ability to identify whether a real-world image is original or has been manipulated” (Nightingale et al. 2017). This suggests a need for computer-aided techniques for image authentication.

Two broad classes of approaches have been proposed for this problem. One is based on verifying an image's integrity as it is taken, ensuring that its provenance can be determined as it circulates on the internet. Another approach is based on detecting modifications to an image.

The emerging field of automated provenance analysis seeks to use metadata to track the chronology of an image or video as it circulates online. There are startups seeking to create camera software that content producers can use to make images which journalists or end users can then verify at the point of reception. This is potentially useful for individuals and institutions intending to create trustworthy images. One could imagine a future in which it is expected of all journalism outlets to only publish verified images. Researchers have also explored approaches to inferring a provenance graph from pre-existing metadata, without the need for the active maintenance of a provenance chain from the point of production (Bharati et al. 2018). However, this does not prevent harms where the truth value of the image is irrelevant, like non-consensual porn.
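To make the capture-time verification idea concrete, here is a minimal sketch of how camera software might bind an image to a key when it is taken, so that a recipient can later check that the bytes are unchanged. It is an illustration only, not a description of any particular startup's product: the function names and the key are hypothetical, and a shared-secret HMAC stands in for the public-key signatures a real deployment would more plausibly use.

```python
import hashlib
import hmac

def sign_at_capture(image_bytes: bytes, device_key: bytes) -> str:
    """Return a hex tag that binds the image bytes to the capturing device's key."""
    return hmac.new(device_key, image_bytes, hashlib.sha256).hexdigest()

def verify_at_reception(image_bytes: bytes, tag: str, device_key: bytes) -> bool:
    """Recompute the tag and compare it in constant time with the one received."""
    expected = sign_at_capture(image_bytes, device_key)
    return hmac.compare_digest(expected, tag)

# Hypothetical key and stand-in image data, for illustration only.
key = b"hypothetical-device-key"
original = bytes(range(256)) * 4
tag = sign_at_capture(original, key)

# Changing even one byte after capture invalidates the tag.
tampered = bytearray(original)
tampered[10] ^= 0xFF
tampered = bytes(tampered)

print(verify_at_reception(original, tag, key))   # unmodified image
print(verify_at_reception(tampered, tag, key))   # modified image
```

A check like this only establishes that an image is unmodified since capture. As noted above, it does nothing for harms that do not depend on whether the image is believed to be authentic.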

The field of digital image forensics has produced methods for authenticating images by detecting modifications in the image itself. Classes of technique include: pixel-level anomaly detection, format-based statistical analysis of compression schemes, camera-based analysis of hardware-specific artifacts, physical anomaly detection between lighting and 3D objects, and geometric techniques based on object positions in the world (Farid 2009).
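As one illustration of the pixel-level class, the sketch below looks for copy-move (cloning) tampering, where a region of an image is pasted elsewhere in the same image. It is a deliberately simplified assumption-laden example: the image is a small grid of integers, the function name is hypothetical, and only exactly identical blocks are matched, whereas practical detectors need to tolerate recompression and noise.

```python
from collections import defaultdict

def find_cloned_blocks(image, block=4):
    """Group the top-left (row, col) positions of block x block patches
    whose pixel values are exactly identical."""
    height, width = len(image), len(image[0])
    seen = defaultdict(list)
    for r in range(height - block + 1):
        for c in range(width - block + 1):
            # Use the patch contents as a dictionary key.
            patch = tuple(tuple(image[r + i][c:c + block]) for i in range(block))
            seen[patch].append((r, c))
    # Keep only patches that occur at more than one position.
    return [coords for coords in seen.values() if len(coords) > 1]

# Synthetic 12x12 "image" in which every pixel value is unique.
width = height = 12
image = [[r * width + c for c in range(width)] for r in range(height)]

# Simulate tampering: copy the 4x4 region at (1, 1) onto (7, 6).
for i in range(4):
    for j in range(4):
        image[7 + i][6 + j] = image[1 + i][1 + j]

print(find_cloned_blocks(image))  # [[(1, 1), (7, 6)]]
```

Exact matching keeps the example short, but it would flag any flat region (such as a clear sky) as cloned, which is one reason real tools compare more robust block features instead of raw pixels.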

Recent advances in machine learning have enabled approaches that learn more general methods for detecting image manipulation, without requiring knowledge of specific methods of tampering (Huh et al. 2018, Bayar et al. 2016). In response to increasing public attention on deepfakes, new work has emerged on building datasets and detectors specifically for video of faces (Rössler et al. 2019, Li et al. 2018, Zhou et al. 2018).

There is interest in deepfake detection work not only in academia, but also in industry and in the defense sector. Gfycat, a company that hosts animated gifs, has reportedly attempted to develop in-house deepfake detection. DARPA has established the Media Forensics group to fund research in image and video manipulation detection.

The usefulness of detection-based approaches depends on their adoption by large social media platforms, in order to mitigate circulation of deepfakes at the point of distribution. Adoption in turn depends on the labor of human content moderators to distinguish between harmful and non-harmful deepfakes, which will be difficult at scale and will require advances in forensic interface design.

Changes in the sophistication and accessibility of AI techniques have resurfaced old questions and raised new ones about how images of people should be treated. I look forward to seeing work from researchers across disciplines responding to these potential harms, as well as developing my ongoing research and writing on questions of consent, autonomy, labor, and data re-appropriation in deepfakes during my PhD.

References

- Ayyub, R. 2018. I was the victim of a deepfake porn plot intended to silence me. https://www.huffingtonpost.in/rana-ayyub/i-was-the-victim-of-a-deepfake-porn-plot-intended-to-silence-me-says-rana-ayyub_a_23595592/

- Bayar, B., and Stamm, M. C. 2016. A deep learning approach to universal image manipulation detection using a new convolutional layer. In Proceedings of the 4th ACM Workshop on Information Hiding and Multimedia Security, 5–10. ACM.

- Beres, D. 2018. Pornhub continued to host ‘deepfake’ porn with millions of views, despite promise to ban. https://mashable.com/2018/02/12/pornhub-deepfakes-ban-not-working/#bLVv7q2UMPqR

- Bharati, A.; Moreira, D.; Brogan, J.; Hale, P.; Bowyer, K. W.; Flynn, P. J.; Rocha, A.; and Scheirer, W. J. 2018. Beyond Pixels: Image Provenance Analysis Leveraging Metadata. arXiv e-prints arXiv:1807.03376.

- Brandom, R. 2019. Deepfake propaganda is not a real problem. https://www.theverge.com/2019/3/5/18251736/deepfake-propaganda-misinformation-troll-video-hoax

- Chan, C.; Ginosar, S.; Zhou, T.; and Efros, A. A. 2018. Everybody Dance Now. arXiv e-prints arXiv:1808.07371.

- Chesney, R., and Citron, D. K. 2018. Deep fakes: A looming challenge for privacy, democracy, and national security.

- Cole, S. 2017. AI-assisted fake porn is here and we’re all fucked. https://motherboard.vice.com/en_us/article/gydydm/gal-gadot-fake-ai-porn

- Cole, S. 2018. Deepfakes Were Created As a Way to Own Women's Bodies—We Can't Forget That. https://broadly.vice.com/en_us/article/nekqmd/deepfake-porn-origins-sexism-reddit-v25n2

- Cole, S. 2018. People Are Using AI to Create Fake Porn of Their Friends and Classmates. https://motherboard.vice.com/en_us/article/ev5eba/ai-fake-porn-of-friends-deepfakes

- Dale, K.; Sunkavalli, K.; Johnson, M. K.; Vlasic, D.; Matusik, W.; and Pfister, H. 2011. Video face replacement. In Proceedings of the 2011 SIGGRAPH Asia Conference, SA ’11, 130:1–130:10. New York, NY, USA: ACM.

- Ehrenkranz, M. 2018. How archivists could stop deepfakes from rewriting history. https://gizmodo.com/how-archivists-could-stop-deepfakes-from-rewriting-hist-1829666009

- Farid, H. 2009. A survey of image forgery detection. IEEE Signal Processing Magazine 26(2):16–25.

- Frenda, S.; Knowles, E.; Saletan, W.; and Loftus, E. 2013. False memories of fabricated political events. Journal of Experimental Social Psychology 49(2):280–286.

- Harwell, D. 2018. Scarlett Johansson on fake AI-generated sex videos: ‘Nothing can stop someone from cutting and pasting my image’. https://www.washingtonpost.com/technology/2018/12/31/scarlett-johansson-fake-ai-generated-sex-videos-nothing-can-stop-someone-cutting-pasting-my-image/

- Hao, K. 2018. Deepfake-busting apps can spot even a single pixel out of place. MIT Technology Review. https://www.technologyreview.com/s/612357/deepfake-busting-apps-can-spot-even-a-single-pixel-out-of-place/

- Huh, M.; Liu, A.; Owens, A.; and Efros, A. A. 2018. Fighting fake news: Image splice detection via learned self-consistency. arXiv preprint arXiv:1805.04096.

- Jin, Z.; Mysore, G. J.; DiVerdi, S.; Lu, J.; and Finkelstein, A. 2017. VoCo: Text-based insertion and replacement in audio narration. ACM Transactions on Graphics 36(4):Article 96, 13 pages.

- Kim, H.; Garrido, P.; Tewari, A.; Xu, W.; Thies, J.; Nießner, M.; Perez, P.; Richardt, C.; Zollhofer, M.; and Theobalt, C. 2018. Deep Video Portraits. ACM Transactions on Graphics 2018 (TOG).

- Li, Y.; Chang, M.-C.; Farid, H.; and Lyu, S. 2018. In ictu oculi: Exposing AI generated fake face videos by detecting eye blinking. arXiv preprint arXiv:1806.02877.

- Liu, M.; Breuel, T.; and Kautz, J. 2017. Unsupervised image-to-image translation networks. CoRR abs/1703.00848.

- Lytvynenko, J. 2018. A Belgian political party is circulating a Trump deepfake video. https://www.buzzfeednews.com/article/janelytvynenko/a-belgian-political-party-just-published-a-deepfake-video

- Maras, M.-H., and Alexandrou, A. 2018. Determining authenticity of video evidence in the age of artificial intelligence and in the wake of deepfake videos. The International Journal of Evidence & Proof 1365712718807226.

- Matsakis, L. 2018. Artificial intelligence is now fighting fake porn. Wired. https://www.wired.com/story/gfycat-artificial-intelligence-deepfakes/

- Muncy, J. 2018. This video uses the power of deepfakes to re-capture this character’s cameo appearance in Rogue One. https://io9.gizmodo.com/this-video-uses-the-power-of-deepfakes-to-re-capture-th-1828907452

- Nightingale, S. J.; Wade, K. A.; and Watson, D. G. 2017. Can people identify original and manipulated photos of real-world scenes? Cognitive Research: Principles and Implications 2(1):30.

- Purdom, C. 2018. Deep learning technology is now being used to put Nic Cage in every movie. https://www.avclub.com/deep-learning-technology-is-now-being-used-to-put-nic-c-1822514573

- Robertson, A. 2018. I’m using AI to face-swap Elon Musk and Jeff Bezos, and I’m really bad at it. https://www.theverge.com/2018/2/11/16992986/fakeapp-deepfakes-ai-face-swapping

- Rössler, A.; Cozzolino, D.; Verdoliva, L.; Riess, C.; Thies, J.; and Nießner, M. 2019. FaceForensics++: Learning to Detect Manipulated Facial Images. arXiv.

- Suwajanakorn, S.; Seitz, S. M.; and Kemelmacher-Shlizerman, I. 2017. Synthesizing Obama: Learning lip sync from audio. ACM Trans. Graph. 36(4):95:1–95:13.

- Thies, J.; Zollhofer, M.; Stamminger, M.; Theobalt, C.; and Nießner, M. 2016. Face2Face: Real-time Face Capture and Reenactment of RGB Videos. In Proc. Computer Vision and Pattern Recognition (CVPR), IEEE.

- Turek, M. Media forensics (medifor). https://www.darpa.mil/program/media-forensics

- Zhou, P.; Han, X.; Morariu, V. I.; and Davis, L. S. 2018. Two-Stream Neural Networks for Tampered Face Detection. arXiv e-prints arXiv:1803.11276.